# the next line increases the verbosity of Linda output messages export GAUSS_LFLAGS= "-v "ĭate # put a date stamp in the output file for timing/scaling testing $SLURM_JOBID # Always specify a scratch directory on a fast storage space (not /home or /projects!) export GAUSS_SCRDIR=/scratch/summit/ $USER/ $SLURM_JOBID # the next line prevents OpenMP parallelism from conflicting with Gaussian's internal parallelization export OMP_NUM_THREADS=1 #!/bin/bash #SBATCH -job-name=g16-test #SBATCH -partition=shas #SBATCH -nodes=2 #SBATCH -ntasks-per-node=1 #SBATCH -cpus-per-task=24 #SBATCH -time=00:50:00 #SBATCH -output=g16-test.%j.outįor n in `scontrol show hostname | sort -u ` do echo $-opaĭone | paste -s -d, > tsnet.nodes. Per node, with multiple SMP cores per node. Summit, your job will need to span multiple nodes using the Linda In order to run on more than 24 cores in the "shas" partition on Mkdir $GAUSS_SCRDIR # only needed if using /scratch/summitĭate # put a date stamp in the output file for timing/scaling testing if desired # Always specify a scratch directory on a fast storage space (not /home or /projects!) export GAUSS_SCRDIR=/scratch/summit/ $USER/ $SLURM_JOBID # or export GAUSS_SCRDIR=$SLURM_SCRATCH # alternatively, to use the local SSD max 159GB available # the next line prevents OpenMP parallelism from conflicting with Gaussian's internal SMP parallelization export OMP_NUM_THREADS=1 #!/bin/bash #SBATCH -job-name=g16-test #SBATCH -partition=shas #SBATCH -nodes=1 #SBATCH -ntasks-per-node=24 #SBATCH -time=00:50:00 #SBATCH -output=g16-test.%j.out Scale, as there may be diminishing returns beyond a certain number of You should test your G16Ĭomputations with several different core counts to see how well they This value should correspond to the number of cores YouĬan specify how many cores to use via the '-p' flag to g16

Many G16 functions scale well to 8 or more cores on the same node. Parallel jobs Single-node (SMP) parallelism The amount of memory your Slurm job has requested. Support computations on even modest-sized molecules. Support the amount of memory that needs to be allocated to efficiently The default dynamic memory request in G16 is frequently too small to If you create a Gaussian input file named h2o_dft.com then you canĮxecute it simply via g16 h2o_dft. Performance will be dramatically reduced. If this directory is in /projects or /home, then your job's Scratch files will be created in whatever directory G16 is run from ( /scratch/summit/$USER on Summit or rc_scratch/$USER on Blanca.) If GAUSS_SCRDIR is not set, then the These should always be on a scratch storage system However, it is important to specify GAUSS_SCRDIR to tell G16 where Not need to source g16.login or g16.profile if running single-node jobs, but if you are running multi-node parallel jobs you will need to add source $g16root/g16/bsd/g16.login (tcsh shell) or source $g16root/g16/bsd/g16.profile (bash shell) to your job script after you load the Gaussian module. Nearly all necessaryĮnvironment variables are configured for you via the module.

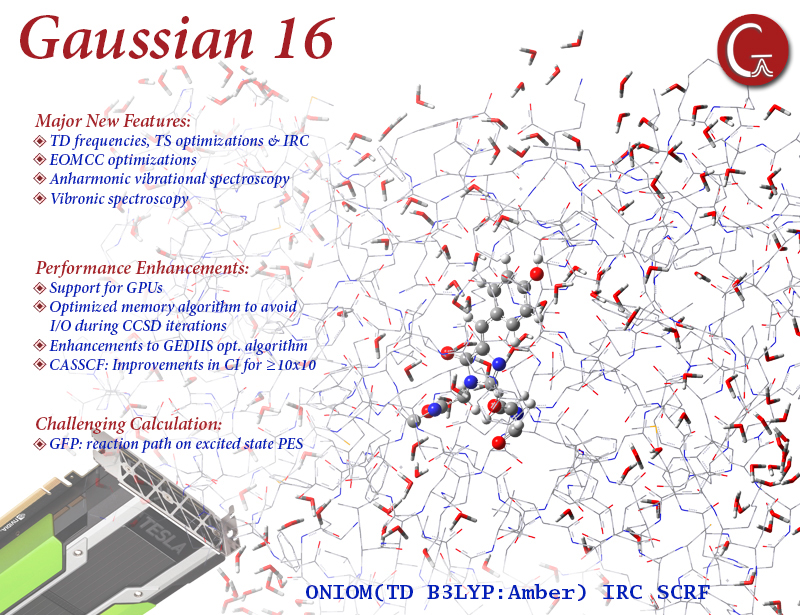

Parallel gaussian software software#

To set up your shell environment to use G16, load a Gaussian software Good general instructions can be found atĪre needed when running on Summit.

Parallel gaussian software how to#

It does not attempt to teach how to use Gaussian for solving

This document describes how to run G16 jobs efficiently on Rights, and citation information shown at the top of your Gaussian

Universities that have purchased Gaussian licenses. Important: Gaussian is available on Summit only to members of
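The node-list step of the multi-node job can be tried outside of Slurm. The sketch below wraps the same `sort -u` and `paste -s -d,` pipeline in a hypothetical helper, `opa_node_list`, which reads hostnames on standard input. The helper name and the two host names are made up for illustration; inside a real job the input would come from `scontrol show hostname`.

```shell
#!/bin/bash
# Hypothetical helper (not part of Gaussian or Slurm): read one hostname per
# line on stdin, de-duplicate, append "-opa", and join with commas.
opa_node_list() {
  sort -u | while read -r n; do
    echo "${n}-opa"
  done | paste -s -d, -
}

# Made-up host names stand in for a real allocation; the duplicate entry
# shows that repeated names are collapsed.
node_list=$(printf 'shas0102\nshas0101\nshas0102\n' | opa_node_list)
echo "$node_list"
```

Writing the result to a per-job file, as the job script does, keeps concurrent jobs from overwriting each other's node lists.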

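To follow the advice about testing several core counts, one option is to generate one g16 command per core count. The sketch below is a dry run that only prints the commands, so it can be tried without Gaussian installed. The core counts are arbitrary examples, and `h2o_dft` is the sample input name used in this document.

```shell
#!/bin/bash
# Dry run: print the g16 command for each core count instead of running it.
# The core counts are illustrative; stay within what your Slurm job requested.
input=h2o_dft
commands=""
for cores in 4 8 16 24; do
  cmd="g16 -p=${cores} ${input}"
  echo "$cmd"
  commands="${commands}${cmd};"
done
```

In a real scaling test, each command would be run between two `date` calls so that the elapsed time for each core count appears in the job output.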

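The warning about scratch files landing in /home or /projects can also be checked inside a job script before G16 starts. The sketch below uses a hypothetical function, `check_scrdir`, that rejects an empty path or one under those two locations. The function name and the sample paths are assumptions for illustration only.

```shell
#!/bin/bash
# Hypothetical guard (not part of Gaussian): succeed only if the given
# scratch path is non-empty and not under /home or /projects.
check_scrdir() {
  case "$1" in
    "")                    return 1 ;;  # unset: G16 would use the current directory
    /home|/home/*)         return 1 ;;
    /projects|/projects/*) return 1 ;;
    *)                     return 0 ;;
  esac
}

# Illustrative paths only.
for dir in "/scratch/summit/someuser/12345" "/projects/someuser/tmp" ""; do
  if check_scrdir "$dir"; then
    echo "ok:  '$dir'"
  else
    echo "bad: '$dir'"
  fi
done
```

A job script could call `check_scrdir "$GAUSS_SCRDIR" || exit 1` right after the export, so that a misconfigured job stops early instead of running slowly.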